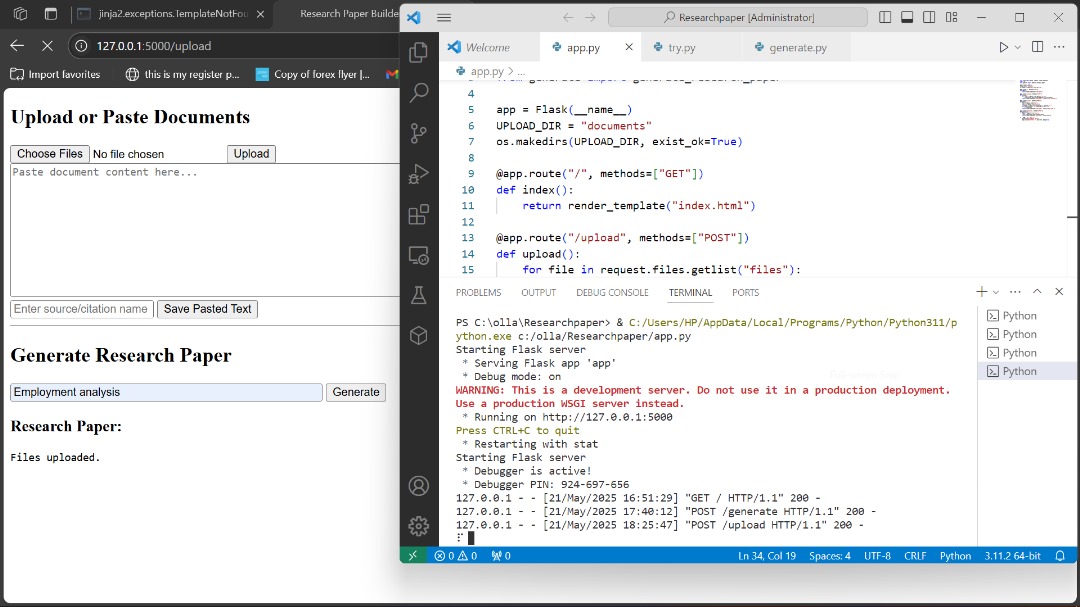

Building an AI Research Paper Generator with Flask + Ollama

In a world where artificial intelligence (AI) is reshaping how we work, learn, and create, I decided to take a practical step: build a custom research paper generator that can work offline and generate complete academic write-ups using locally stored documents as references.

This project combines the simplicity of Flask, the power of Python, and the efficiency of Ollama’s LLMs (like Mistral) to automate research paper writing — structured, cited, and ready for review.

Why I Built This

I’ve always been intrigued by the challenge of making AI useful beyond chat applications. As someone involved in academia and research, I’ve seen how time-consuming it can be to draft well-organized research papers. With this tool, I wanted to make it easier to:

- Use AI without relying on internet access

- Maintain full control over reference materials

- Cite documents automatically during generation

- Produce research papers in a standard academic format

How It Works

Here’s the high-level workflow of the system:

- Upload or Paste Content: You can upload .txt files or paste text directly into the app.

- Enter a Topic: Input the research topic you want the paper to be about.

- Generate the Paper: The app prompts the AI model (Mistral via Ollama) to generate a full paper using only your supplied documents.

- Structured Output: You get a paper that includes:

  - Title

  - Abstract

  - Introduction

  - Literature Review

  - Methodology

  - Expected Results

  - Conclusion

  - In-text source references like (Source: filename.txt)
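To make the workflow above more concrete, here is a minimal sketch of how the topic and the supplied documents could be combined into a single prompt. The function name `build_prompt`, the `SECTIONS` constant, and the exact prompt wording are illustrative assumptions, not the code used in the app.

```python
from typing import Mapping

# Section names taken from the structured output described above.
SECTIONS = [
    "Title", "Abstract", "Introduction", "Literature Review",
    "Methodology", "Expected Results", "Conclusion",
]


def build_prompt(topic: str, documents: Mapping[str, str]) -> str:
    """Assemble one prompt from the topic and the supplied documents.

    `documents` maps a filename to its text (hypothetical structure).
    """
    # Label each document with its filename so the model can cite it.
    sources = "\n\n".join(
        f"[Source: {name}]\n{text.strip()}" for name, text in documents.items()
    )
    outline = "\n".join(f"- {section}" for section in SECTIONS)
    return (
        f"Write a research paper on the topic: {topic}\n\n"
        "Use only the documents below as references. After each claim taken "
        "from a document, cite it in the form (Source: filename.txt).\n\n"
        f"Structure the paper with these sections:\n{outline}\n\n"
        f"Documents:\n{sources}\n"
    )
```

Pasted text can go through the same function if it is given a placeholder filename, so that it can still be cited.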

Tech Stack

Flask: A lightweight web framework to build the user interface and handle uploads/forms.

Python: For file processing and subprocess management.

Ollama: To run local language models like Mistral — ideal for offline use.

HTML/Jinja: For templating the interface.

Challenges I Faced

Encoding Issues: Some uploaded documents had special characters that caused errors during reading. I solved this by detecting file encoding and ensuring compatibility with UTF-8.
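As a rough illustration of the encoding problem, the sketch below decodes uploaded bytes by trying a short list of encodings in order. This is a simpler stand-in for real encoding detection (it does not use a detection library), and the function name `decode_upload` and the choice of fallback encodings are assumptions.

```python
def decode_upload(raw: bytes) -> str:
    """Decode uploaded bytes to str, trying a few common encodings in order."""
    # utf-8-sig behaves like UTF-8 but also strips a leading byte-order mark.
    for encoding in ("utf-8-sig", "cp1252"):
        try:
            return raw.decode(encoding)
        except UnicodeDecodeError:
            continue
    # latin-1 maps every byte to a character, so this last step cannot fail.
    return raw.decode("latin-1")
```

Once decoded, the text is an ordinary Python string and can be written back out or passed to the model as UTF-8.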

Subprocess Management: Interfacing Python with Ollama’s command-line interface required precise handling to ensure prompts and outputs were transferred smoothly.
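One way to wire this up is sketched below, under the assumption that the prompt is piped to `ollama run mistral` on standard input and the paper is read back from standard output. The function name `run_model`, the timeout value, and the choice of stdin over a command-line argument are illustrative, not necessarily what the app does.

```python
import subprocess
from typing import Sequence


def run_model(
    prompt: str,
    command: Sequence[str] = ("ollama", "run", "mistral"),
    timeout: float = 600.0,  # assumed limit; long papers can take a while
) -> str:
    """Send the prompt to a command on stdin and return its stdout as text."""
    result = subprocess.run(
        list(command),
        input=prompt,
        capture_output=True,
        text=True,
        encoding="utf-8",  # keep prompt and output in UTF-8 on every platform
        timeout=timeout,
    )
    if result.returncode != 0:
        # Surface the tool's own error text instead of returning partial output.
        raise RuntimeError(f"Model command failed: {result.stderr.strip()}")
    return result.stdout.strip()
```

Keeping the command as a parameter makes it easy to swap in another model name, or a stub command when testing without Ollama installed.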

Error Handling: I made sure the app gives clear error feedback when a file upload or a generation run fails.
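For the upload side, a small validation step before generation might look like the sketch below. The function name `validate_request`, the messages, and the rule that only .txt files are accepted (based on the upload step described earlier) are assumptions for illustration.

```python
from pathlib import PurePath
from typing import List, Sequence

ALLOWED_EXTENSIONS = {".txt"}


def validate_request(
    topic: str, filenames: Sequence[str], pasted_text: str = ""
) -> List[str]:
    """Return user-facing error messages; an empty list means the input is valid."""
    errors = []
    if not topic.strip():
        errors.append("Please enter a research topic.")
    # Browsers submit an empty filename when no file was chosen.
    named = [name for name in filenames if name]
    if not named and not pasted_text.strip():
        errors.append("Upload at least one .txt file or paste some text.")
    for name in named:
        if PurePath(name).suffix.lower() not in ALLOWED_EXTENSIONS:
            errors.append(f"Unsupported file type: {name}")
    return errors
```

The returned messages can be shown on the form page, so the user sees what to fix before the model is ever called.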

Why This Matters

This isn’t just a weekend hack — it’s a real productivity tool for:

- Students writing term papers

- Researchers looking to summarize findings

- Content creators who want structured, sourced content fast

- Developers exploring offline GenAI tools

Most importantly, it’s privacy-first and works completely offline. Your data stays with you.

What’s Next?

I plan to:

- Add PDF and DOCX export

- Build a version that supports multiple models

- Create a desktop app version using Flask + PyInstaller or Electron

You can follow my journey or request access to the GitHub repo soon.

Final Thoughts

Building this project reminded me how powerful and accessible AI is becoming — especially when you bring it closer to real-world use cases like academic writing. I hope this inspires others to explore how GenAI can be adapted to solve niche but important problems.

Let’s connect if you’re interested in:

- GenAI applications

- Academic automation

- Building tools with Ollama and LLMs
